The essential difference between intrinsic and extrinsic approaches is just whether the typing rules are viewed as defining the language, or as a formalism for verifying properties of a more primitive underlying language. It is possible to define an extrinsic semantics on annotated terms simply by ignoring the types (i.e., through type erasure), just as it is possible to give an intrinsic semantics on unannotated terms when the types can be deduced from context (i.e., through type inference).
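Type erasure can be sketched in a few lines. The classes below (`Base`, `Arrow`, `Var`, `Lam`, `App`, `ULam`, `UApp`) and the function `erase` are hypothetical representations chosen for this illustration, not taken from any particular system. Annotated terms carry a type on each binder; `erase` forgets those annotations. Under an extrinsic reading, an annotated term means whatever its erasure means, so identity functions annotated at different types collapse to the same untyped term.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Union


# Hypothetical representations, chosen only for this illustration.
@dataclass(frozen=True)
class Base:
    name: str

@dataclass(frozen=True)
class Arrow:
    dom: Ty
    cod: Ty

Ty = Union[Base, Arrow]

@dataclass(frozen=True)
class Var:
    name: str

@dataclass(frozen=True)
class Lam:  # annotated abstraction: λx:T. body
    param: str
    ty: Ty
    body: Term

@dataclass(frozen=True)
class App:
    fn: Term
    arg: Term

Term = Union[Var, Lam, App]

@dataclass(frozen=True)
class ULam:  # unannotated abstraction: λx. body
    param: str
    body: UTerm

@dataclass(frozen=True)
class UApp:
    fn: UTerm
    arg: UTerm

UTerm = Union[Var, ULam, UApp]


def erase(t: Term) -> UTerm:
    """Forget every type annotation; variables are shared by both term languages."""
    if isinstance(t, Var):
        return t
    if isinstance(t, Lam):
        return ULam(t.param, erase(t.body))
    return UApp(erase(t.fn), erase(t.arg))


# Two identity functions annotated at different types erase to the same term.
int_id = Lam("x", Base("int"), Var("x"))
bool_id = Lam("x", Base("bool"), Var("x"))
print(erase(int_id))  # ULam(param='x', body=Var(name='x'))
print(erase(int_id) == erase(bool_id))  # True
```

The reverse direction mentioned in the text, type inference, would need a unification-based algorithm and is not attempted here.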

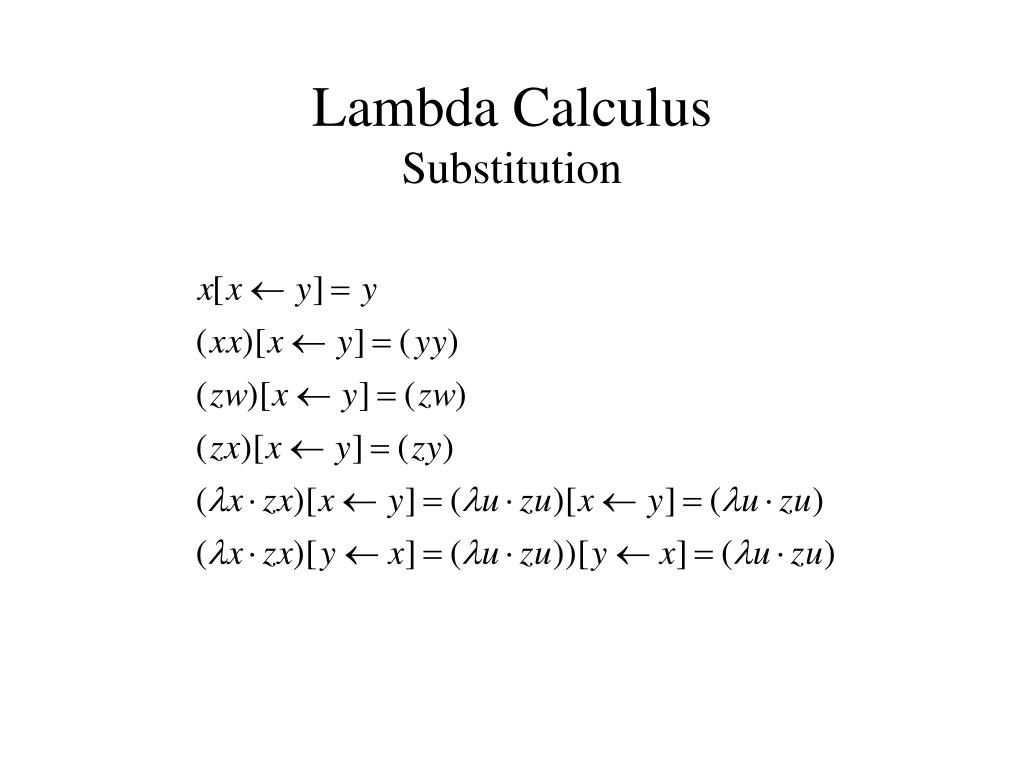

The distinction between intrinsic and extrinsic semantics is sometimes associated with the presence or absence of annotations on lambda abstractions, but strictly speaking this usage is imprecise.

The simply typed lambda calculus is usually written λ→. Substitution plays an important role in it as a key part of the reduction rules, and the calculus is strongly normalising: every reduction sequence starting from a well-typed term terminates.

EDIT: people have asked for some references on the equivalence between the terms 'Simply Typed Lambda Calculus' and 'Simple Type Theory.' When I said that, I didn't mean they were two different systems that admit some technical 'equivalence,' but rather that I have generally seen the two terms used interchangeably to mean the same thing, which is the thing defined in Alonzo Church's 1940 paper.

A Lisp lambda expression is a kind of anonymous function, and the lambda keyword in Lisp can be understood to refer to the lambda calculus only through analogy. John McCarthy introduced LISP in his April 1960 paper "Recursive Functions of Symbolic Expressions and Their Computation by Machine, Part I". The following paragraph is from page 6:

"It is usual in mathematics - outside of mathematical logic - to use the word “function” imprecisely and to apply it to forms … Because we shall later compute with expressions for functions, we need a distinction between functions and forms and a notation for expressing this distinction. This distinction and a notation for describing it, from which we deviate trivially, is given by Church."

McCarthy's reference is to Church, The Calculi of Lambda-Conversion (Princeton University …). The 1941 paper referenced by McCarthy seems to be about the typed lambda calculus, in contradiction to the Wikipedia article's introduction. The Wikipedia article on the lambda calculus has a history of Church's publications.

In summary, Lisp corresponds to an untyped, call-by-value lambda calculus extended with constants. "Call by value" names the evaluation order: arguments are evaluated before the function is applied.
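One way to see why λ→ rules out looping terms such as (λx. x x)(λx. x x) is to try typing the self-application inside them. The sketch below is a minimal type checker for Church-style annotated λ→ terms; the classes and the function `type_of` are hypothetical names for illustration, implementing the usual variable, abstraction, and application rules and returning `None` for ill-typed input. It does not prove strong normalisation; it only shows where self-application fails to type.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Dict, Optional, Union


# Hypothetical λ→ syntax: base types, arrow types, Church-style annotated terms.
@dataclass(frozen=True)
class Base:
    name: str

@dataclass(frozen=True)
class Arrow:
    dom: Ty
    cod: Ty

Ty = Union[Base, Arrow]

@dataclass(frozen=True)
class Var:
    name: str

@dataclass(frozen=True)
class Lam:
    param: str
    ty: Ty
    body: Term

@dataclass(frozen=True)
class App:
    fn: Term
    arg: Term

Term = Union[Var, Lam, App]


def type_of(t: Term, ctx: Dict[str, Ty]) -> Optional[Ty]:
    """Return the type of t under ctx, or None if t is ill-typed."""
    if isinstance(t, Var):
        return ctx.get(t.name)  # variable rule: look the name up
    if isinstance(t, Lam):
        # abstraction rule: type the body with the parameter added to the context
        body_ty = type_of(t.body, {**ctx, t.param: t.ty})
        return None if body_ty is None else Arrow(t.ty, body_ty)
    # application rule: the function's domain must equal the argument's type
    fn_ty = type_of(t.fn, ctx)
    arg_ty = type_of(t.arg, ctx)
    if isinstance(fn_ty, Arrow) and fn_ty.dom == arg_ty:
        return fn_ty.cod
    return None


o = Base("o")
identity = Lam("x", o, Var("x"))
print(type_of(identity, {}))  # Arrow(dom=Base(name='o'), cod=Base(name='o'))

# Self-application λx:A. x x fails for every choice of A: if A is a base type,
# x cannot be applied; if A = B → C, the argument x has type A, which is not B.
for a in (o, Arrow(o, o), Arrow(Arrow(o, o), o)):
    print(type_of(Lam("x", a, App(Var("x"), Var("x"))), {}))  # None
```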

In the "pure" untyped lambda calculus, everything is coded as functions. (You can google for the popular "Church encoding" and the less popular "Scott encoding".) Lisp has non-functional data, like atoms and numbers and such, so this would count as "untyped lambda calculus extended with constants." If (a, b) is a pair of values, then the types of a and b can be different. However, the type of the third argument, the function which uses the two values, has to be consistent with the component types. Nothing in the untyped calculus enforces this; otherwise we would have to switch to a typed lambda calculus, which we won't do here.

Another important difference is in order of evaluation. The rules for reducing lambda-calculus terms are highly nondeterministic: a term may contain several reducible subterms, and the rules do not say which to reduce first. (There's a theorem, the Church-Rosser theorem, which says loosely that as long as things terminate, order of evaluation doesn't matter.) In practice lambda terms are typically reduced using leftmost-outermost, aka "normal-order", reduction, because if any reduction strategy terminates, that one does. This is very different from Lisp, which always evaluates arguments to values before doing a beta-reduction.
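The contrast between normal-order reduction and Lisp-style evaluation of arguments first can be sketched with a tiny reducer for untyped terms extended with constants. All names here (`Const`, `subst`, `step_normal`, `step_cbv`, `run`) are hypothetical, and substitution is deliberately naive: it is only capture-safe when the substituted term is closed, which holds for the examples below. The classic test term is (λy. 42) Ω, where Ω = (λx. x x)(λx. x x) reduces to itself forever: normal order discards Ω unevaluated, while call by value tries to evaluate it first.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional, Union


# Hypothetical untyped λ-terms extended with constants.
@dataclass(frozen=True)
class Const:
    value: object

@dataclass(frozen=True)
class Var:
    name: str

@dataclass(frozen=True)
class Lam:
    param: str
    body: Term

@dataclass(frozen=True)
class App:
    fn: Term
    arg: Term

Term = Union[Const, Var, Lam, App]


def subst(t: Term, x: str, v: Term) -> Term:
    """Replace free occurrences of x in t by v (naive: assumes v is closed)."""
    if isinstance(t, Var):
        return v if t.name == x else t
    if isinstance(t, Lam):
        return t if t.param == x else Lam(t.param, subst(t.body, x, v))
    if isinstance(t, App):
        return App(subst(t.fn, x, v), subst(t.arg, x, v))
    return t  # constants contain no variables


def step_normal(t: Term) -> Optional[Term]:
    """One leftmost-outermost step, reducing under λ too; None if t is normal."""
    if isinstance(t, App):
        if isinstance(t.fn, Lam):
            return subst(t.fn.body, t.fn.param, t.arg)  # outermost redex first
        s = step_normal(t.fn)
        if s is not None:
            return App(s, t.arg)
        s = step_normal(t.arg)
        return None if s is None else App(t.fn, s)
    if isinstance(t, Lam):
        s = step_normal(t.body)
        return None if s is None else Lam(t.param, s)
    return None


def step_cbv(t: Term) -> Optional[Term]:
    """One call-by-value step: evaluate the function, then the argument, then apply."""
    if not isinstance(t, App):
        return None  # constants, variables and λs do not step
    if not isinstance(t.fn, (Lam, Const)):
        s = step_cbv(t.fn)
        return None if s is None else App(s, t.arg)
    if not isinstance(t.arg, (Lam, Const)):
        s = step_cbv(t.arg)
        return None if s is None else App(t.fn, s)
    if isinstance(t.fn, Lam):
        return subst(t.fn.body, t.fn.param, t.arg)
    return None  # applying a constant is stuck in this sketch


def run(step: Callable[[Term], Optional[Term]], t: Term, limit: int = 1000) -> Optional[Term]:
    """Step until no rule applies; None means the step limit was hit."""
    for _ in range(limit):
        nxt = step(t)
        if nxt is None:
            return t
        t = nxt
    return None


half = Lam("x", App(Var("x"), Var("x")))
omega = App(half, half)                 # Ω reduces to itself forever
term = App(Lam("y", Const(42)), omega)  # (λy. 42) Ω

print(run(step_normal, term))  # Const(value=42): the argument is discarded unevaluated
print(run(step_cbv, term))     # None: evaluating Ω first never finishes
```

When both strategies do terminate, as on (λx. x) 1, they agree, in line with the Church-Rosser theorem.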

Lisp is a dynamically typed language; would that roughly correspond to the untyped lambda calculus?
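The question can be tried out in any dynamically typed host. Below is a minimal sketch in Python (used here instead of Lisp purely for convenience) of the standard Church encoding of pairs, λa.λb.λf. f a b; the names `pair`, `first`, and `second` are illustrative, not a library API. It shows the two points made about pairs: the components may have different types, and the selector passed as the third argument must suit both of them, a requirement that an untyped setting only checks when the code runs.

```python
def pair(a):
    """Church pair λa.λb.λf. f a b: the pair is a function awaiting a selector f."""
    return lambda b: lambda f: f(a)(b)


def first(p):
    return p(lambda a: lambda b: a)  # selector that keeps the first component


def second(p):
    return p(lambda a: lambda b: b)  # selector that keeps the second component


p = pair(42)("answer")      # components of different types: int and str
print(first(p), second(p))  # prints: 42 answer

# The selector must suit both components. This one does ...
print(p(lambda a: lambda b: f"{a}:{b}"))  # prints: 42:answer

# ... this one does not, and without static types the mismatch surfaces only at run time.
try:
    p(lambda a: lambda b: a + b)
except TypeError as err:
    print("rejected at run time:", err)
```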
